
US9413726B2 - Direct cache access for network input/output

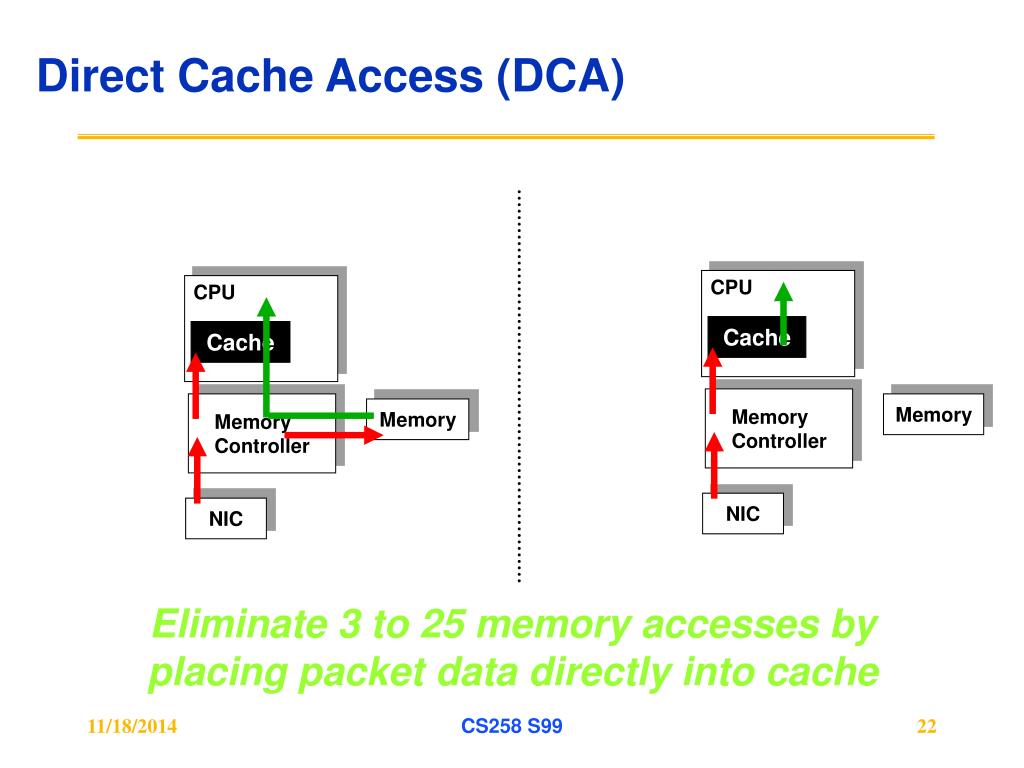

*Figure 3 from Direct cache access for high bandwidth network I/O*

US9413726B2 - Direct cache access for network input/output. A set of DCA control settings is defined by a network I/O device of a network security device for each of multiple I/O device queues based on network security …
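To make the idea of per-queue settings concrete, here is a minimal Python sketch of a table holding one DCA settings record per I/O device queue. Everything in it is hypothetical: the `DcaSettings` fields, the `build_queue_settings` and `settings_for` helpers, and the policy of enabling payload DCA only for queues whose payloads the CPU will inspect are illustrative assumptions, not the patent's claims or any real driver's register layout.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class DcaSettings:
    """Hypothetical per-queue DCA control settings (field names are illustrative)."""
    header_dca: bool   # push packet headers toward the target CPU's cache
    payload_dca: bool  # push packet payloads toward the target CPU's cache
    target_cpu: int    # CPU whose cache should receive the data (-1 = none)


# Fallback for queues that have no entry: DCA off.
DISABLED = DcaSettings(header_dca=False, payload_dca=False, target_cpu=-1)


def build_queue_settings(queue_to_cpu: dict[int, int],
                         inspect_payload: set[int]) -> dict[int, DcaSettings]:
    """Create one DcaSettings per queue.

    Illustrative policy (an assumption, not the patent's): every queue gets
    header DCA toward the CPU that services it; payload DCA is enabled only
    for queues whose payloads that CPU will inspect.
    """
    return {
        queue: DcaSettings(header_dca=True,
                           payload_dca=queue in inspect_payload,
                           target_cpu=cpu)
        for queue, cpu in queue_to_cpu.items()
    }


def settings_for(table: dict[int, DcaSettings], queue: int) -> DcaSettings:
    """Look up a queue's settings, defaulting to DCA disabled."""
    return table.get(queue, DISABLED)
```

Keeping the settings per queue means each queue can be configured independently; the actual criteria used in US9413726B2 may differ from this toy policy.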

Direct Cache Access (DCA) fails to work under Red Hat Enterprise Linux 6

*Cache access time (bottom two curves) and CPU cycle time (top two*

Direct Cache Access (DCA) fails to work under Red Hat Enterprise Linux 6. DCA is enabled by performing the following selections.
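When diagnosing a report like this on Linux, one quick check is whether the kernel detected DCA support in the CPU at all; the x86 feature flag for it is listed as `dca` on the `flags` line of `/proc/cpuinfo`. The sketch below only parses that text. It assumes the usual Linux `/proc/cpuinfo` layout, and a present flag does not by itself show that the BIOS selections enable DCA or that the `dca` kernel module is loaded.

```python
from pathlib import Path


def cpu_flags(cpuinfo_text: str) -> set:
    """Return the feature flags from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def has_dca_flag(cpuinfo_text: str) -> bool:
    """True if the kernel reports the 'dca' (Direct Cache Access) CPU feature flag."""
    return "dca" in cpu_flags(cpuinfo_text)


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    if path.exists():
        print("dca flag present:", has_dca_flag(path.read_text()))
    else:
        print("/proc/cpuinfo not found (not a Linux system?)")
```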

Understanding I/O Direct Cache Access Performance for End Host


Understanding I/O Direct Cache Access Performance for End Host. In this paper, we reverse engineer details of one commercial implementation of DCA, Intel's Data Direct I/O (DDIO), to explicate the importance of hardware-…

I/OAT: Add support for DCA - Direct Cache Access [LWN.net]

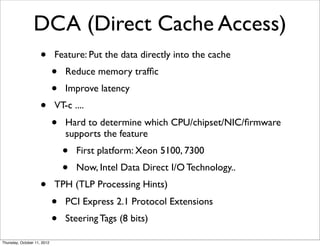

*PPT - Internetworking: Hardware/Software Interface PowerPoint*

I/OAT: Add support for DCA - Direct Cache Access [LWN.net]. The following series implements support for providers and clients of Direct Cache Access (DCA), a method for warming the cache in the correct CPU before …
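The benefit of warming the cache before the CPU touches the data can be seen in a toy model. The Python sketch below simulates one small LRU cache standing in for the target CPU's cache: without DCA the device's writes bypass it, so every first read misses; with DCA the written lines are placed in the cache before the CPU reads them. It is an illustration under simplifying assumptions (a single cache, line-granular addresses, no coherence traffic), and `LRUCache` and `receive_packets` are hypothetical names, not the kernel's DCA provider/client interface.

```python
from collections import OrderedDict


class LRUCache:
    """Tiny fully associative LRU cache that tracks line addresses only."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.lines = OrderedDict()
        self.hits = 0
        self.misses = 0

    def insert(self, addr: int) -> None:
        """Place a line in the cache as most recently used, evicting the LRU line if full."""
        self.lines[addr] = None
        self.lines.move_to_end(addr)
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)

    def read(self, addr: int) -> None:
        """CPU read: count a hit or a miss; a miss fills the line from memory."""
        if addr in self.lines:
            self.hits += 1
            self.lines.move_to_end(addr)
        else:
            self.misses += 1
            self.insert(addr)


def receive_packets(n_packets: int, lines_per_packet: int, dca: bool,
                    capacity: int = 64) -> LRUCache:
    cache = LRUCache(capacity)
    for p in range(n_packets):
        addrs = range(p * lines_per_packet, (p + 1) * lines_per_packet)
        # Device writes the packet. Without DCA it lands only in DRAM;
        # with DCA the lines are also pushed into the target CPU's cache.
        if dca:
            for a in addrs:
                cache.insert(a)
        # The CPU then processes (reads) the whole packet.
        for a in addrs:
            cache.read(a)
    return cache


if __name__ == "__main__":
    for label, c in [("no DCA", receive_packets(10, 4, dca=False)),
                     ("DCA", receive_packets(10, 4, dca=True)),
                     ("DCA, packet > cache", receive_packets(1, 8, dca=True, capacity=4))]:
        print(f"{label}: hits={c.hits}, misses={c.misses}")
```

The last case is worth noting: when more data is pushed into the cache than it can hold before the CPU reads it, the pushed lines evict each other and DCA gives no benefit (hits=0, misses=8).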

Direct cache access (DCA) - modprobe dca

*cpu architecture - Direct Memory Access - Stack Overflow*


Reexamining Direct Cache Access to Optimize I/O Intensive

*Figure 1 from Using Direct Cache Access Combined with Integrated*

Reexamining Direct Cache Access to Optimize I/O Intensive. We demonstrate that optimizing DDIO could reduce the latency of I/O-intensive network functions running at 100 Gbps by up to ~30%.
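One reason DDIO is worth tuning is that it allocates incoming DMA writes into only a limited portion of the last-level cache (a subset of its ways). The Python sketch below does the back-of-the-envelope check this suggests: compare the bytes the RX rings can hold with the DDIO-usable share of the LLC. The cache size, way counts, queue count, descriptor counts, and buffer size in the example are illustrative assumptions, not measurements from the paper, and the footprint is a worst case that assumes every descriptor holds a full, unprocessed buffer.

```python
def ddio_capacity_bytes(llc_bytes: int, llc_ways: int, ddio_ways: int) -> int:
    """LLC bytes I/O writes can allocate into, assuming DDIO is confined to ddio_ways of llc_ways."""
    if not 0 < ddio_ways <= llc_ways:
        raise ValueError("ddio_ways must be in 1..llc_ways")
    return llc_bytes * ddio_ways // llc_ways


def rx_footprint_bytes(num_queues: int, descriptors_per_queue: int, buffer_bytes: int) -> int:
    """Worst case: every RX descriptor points at a full, not-yet-processed buffer."""
    return num_queues * descriptors_per_queue * buffer_bytes


def fits_in_ddio(footprint: int, capacity: int) -> bool:
    return footprint <= capacity


if __name__ == "__main__":
    MiB = 2**20
    # Illustrative numbers only: a 22 MiB, 11-way LLC with 2 ways usable by DDIO.
    capacity = ddio_capacity_bytes(22 * MiB, 11, 2)
    for descriptors in (256, 1024):
        # Assumed: 4 RX queues with 2048-byte buffers.
        footprint = rx_footprint_bytes(4, descriptors, 2048)
        print(descriptors, footprint // MiB, capacity // MiB, fits_in_ddio(footprint, capacity))
```

With these numbers the DDIO share is 4 MiB, so 256 descriptors per queue (2 MiB of buffers) fit while 1024 (8 MiB) do not. When the footprint exceeds the DDIO share, packet data can be evicted to memory before the CPU reads it.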

Characterization of Direct Cache Access on multi-core systems and 10GbE

*Direct and Associative Mapping - Tutorial*

Characterization of Direct Cache Access on multi-core systems and 10GbE. Abstract: 10 GbE connectivity is expected to be a standard feature of server platforms …

From RDMA to RDCA: Toward High-Speed Last Mile of Data Center Networks Using Remote Direct Cache Access

*X86 hardware for packet processing | PPT*

From RDMA to RDCA: Toward High-Speed Last Mile of Data Center Networks Using Remote Direct Cache Access.

*Receive-side Interactions between Processor, Memory and I/O*

Direct cache access

The Cortex®-M85 processor provides a mechanism to read the embedded RAM that the L1 data and instruction caches use through implementation …